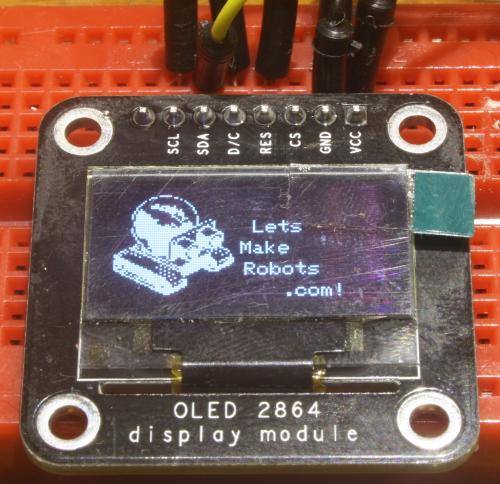

Originally posted on www.letsmakerobots.com

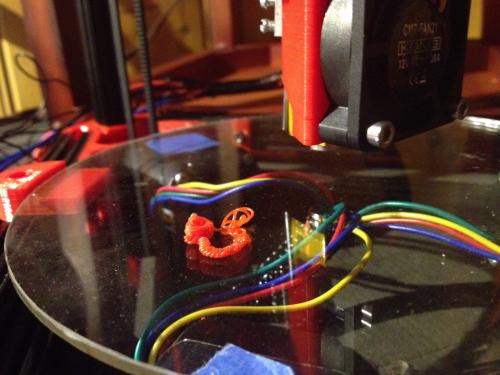

I thought I should give my Kossel a "Robot" page, since Silas asked what the Kossel was, and I told him, "A 3D Printer," to which my precocious son replied, "No, it's a robot."

A lot of the information here is copied from my build blog, but I've rethought its presentation slightly, since two build guides for the Kossel already exist.

Both are put together by organizations selling Kossel kits. Blokmer's guide is much more detailed and slow-paced. Of course, I purchased my ...