I've a robot friend. To be clear, the friend is not a robot; rather, we build robots together. One of the projects we tossed about is building a LEGO sorting machine. Rockets is the friend's name. He (again, not a robot) teaches robotics to kids. For their designs, LEGOs are the primary component. Unfortunately, this results in much time spent preparing for an event.

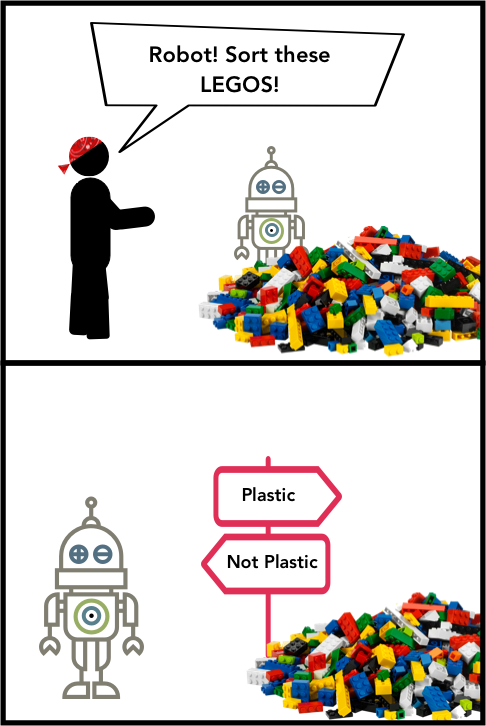

He mentioned to me, "What I really need is a sorting machine," and proceeded to explain his plan for building one.

I was skeptical for some time, but I finally got drawn in when he talked about incorporating a ...