options(java.parameters = "-Xmx14336m") ## memory set to 14 GB

library("sqldf")

library("XLConnect")

library("tcltk")

# 1. Load all PoS

# 2. Load all NBN stays

# 3. Find all PEID's who have had a PoS in the last year

# 4. Find all PEID's of those who have stayed in a NBN bed in the last year

# 5. Append the lists and get a distinct set of PEIDs

# 6. Load family and individual demographic information

# 7. Inner join the demographic data to the distinct PEID set.

# 8. Write all information to file.

# 9. Pray.

allNBN <- readWorksheetFromFile("All TCES NBN Bed Data up to 1-19-2017 -- v04.xlsx", sheet = 1, startRow = 1)

allPoS <- readWorksheetFromFile ("All PoS up to 12-14-16.xlsx", sheet = 1, startRow = 1)

# Make SQL friendly headers

colnames(allNBN)[1] <- "peid"

colnames(allNBN)[3] <- "caseNumber"

colnames(allNBN)[5] <- "firstName"

colnames(allNBN)[6] <- "lastName"

colnames(allNBN)[10] <- "OccupancyStart"

colnames(allNBN)[11] <- "OccupancyEnd"

colnames(allPoS)[1] <- "ServiceName"

colnames(allPoS)[2] <- "peid"

colnames(allPoS)[3] <- "DateOfContact"

# Change dates to be SQL friendly.

allNBN$OccupancyStart <- as.character(allNBN$OccupancyStart)

allNBN$OccupancyEnd <- as.character(allNBN$OccupancyEnd)

allPoS$DateOfContact <- as.character(allPoS$DateOfContact)

# Find the days since the stay in the NBN bed started

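# Alias and ORDER BY use a bare identifier; a quoted 'DaysSince' in ORDER BY is a string constant and sorts nothing.

allNBN <- sqldf("SELECT *, CAST((julianday('now') - julianday(OccupancyStart)) AS INTEGER) AS DaysSince

FROM allNBN

ORDER BY DaysSince DESC")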

write.csv(allNBN, "Test.csv")

# Find the days since the PoS occurred.

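# Alias and ORDER BY use a bare identifier; a quoted 'DaysSince' in ORDER BY is a string constant and sorts nothing.

allPoS <- sqldf("SELECT *, CAST((julianday('now') - julianday(DateOfContact)) AS INTEGER) AS DaysSince

FROM allPoS

ORDER BY DaysSince DESC")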

allNBN <- subset(allNBN)

allPoS <- subset(allPoS)

# Get all PEIDs who've stayed in a NBN bed in the last year

activeInNBN <- sqldf("SELECT * FROM allNBN WHERE DaysSince < 366")

# Get all PEIDS who received a Service in the last year

activePoS <- sqldf("SELECT * FROM allPoS WHERE DaysSince < 366")

# Tag each row with its source so the literal column gets a usable name.

targetElementsFromActivePoS <- sqldf("SELECT peid, ServiceName, DaysSince, 'PoS' AS Source FROM activePoS ORDER BY DaysSince DESC")

targetElementsFromActiveNBN <- sqldf("SELECT peid, caseNumber, firstName, lastName, DaysSince, 'NBN' AS Source FROM activeInNBN ORDER BY DaysSince DESC")

# Get all distinct PEIDs.

posPEIDs <- sqldf("SELECT DISTINCT(peid) FROM targetElementsFromActivePoS")

nbnPEIDs <- sqldf("SELECT DISTINCT(peid) FROM targetElementsFromActiveNBN")

# Combine the PEIDs

posAndNbnPEIDs <- rbind(posPEIDs, nbnPEIDs)

# De-duplicate the combined list.

allPEIDsDistinct <- sqldf("SELECT DISTINCT(peid) FROM posAndNbnPEIDs")

# Load all FAMILY demographic info

allTCESDemographicsFamilies <- readWorksheetFromFile("Demographics in TCES up to 1-24-2017 -- Batch Upload - Participants.xlsx", sheet = 1, startRow = 1)

colnames(allTCESDemographicsFamilies)[1] <- "peid"

# Load all INDIVIDUAL demographic info

allTCESDemographicsIndividuals <- readWorksheetFromFile("Demographics in TCES up to 1-24-2017 -- Batch Upload - Participants.xlsx", sheet = 2, startRow = 1)

colnames(allTCESDemographicsIndividuals)[1] <- "peid"

# Add back demographics

activeThisYearWithDemoFamilies <- sqldf("SELECT * FROM allPEIDsDistinct INNER JOIN allTCESDemographicsFamilies ON allPEIDsDistinct.peid=allTCESDemographicsFamilies.peid")

activeThisYearWithDemoIndividuals <- sqldf("SELECT * FROM allPEIDsDistinct INNER JOIN allTCESDemographicsIndividuals ON allPEIDsDistinct.peid=allTCESDemographicsIndividuals.peid")

write.csv(activeThisYearWithDemoFamilies, file = "Active Families Demographics from TCES.csv")

write.csv(activeThisYearWithDemoIndividuals, file = "Active Individuals Demographics from TCES.csv")

Robber Board

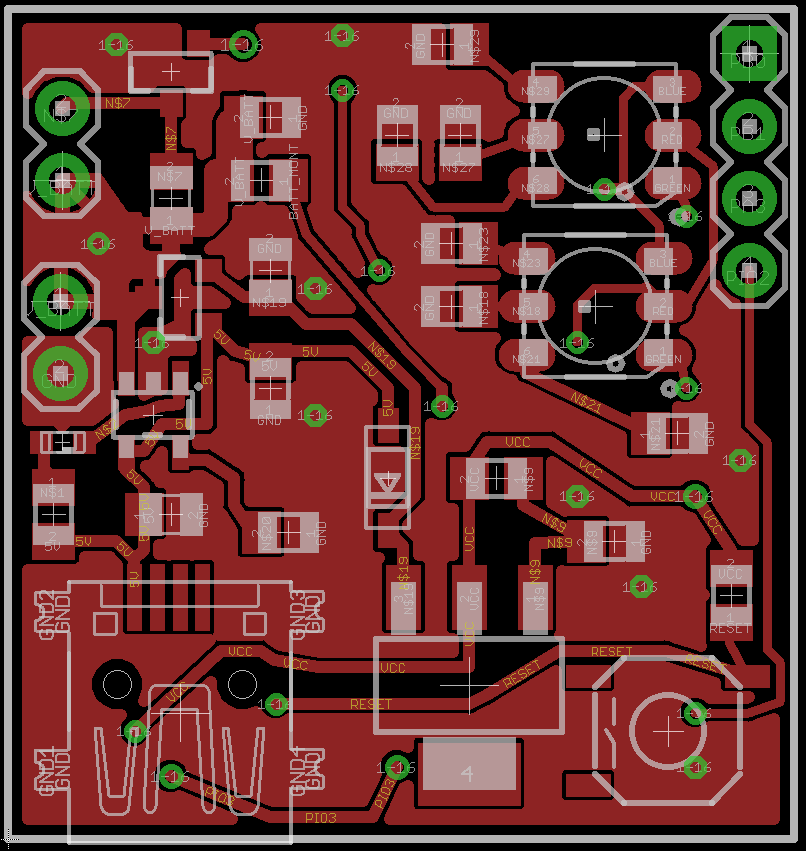

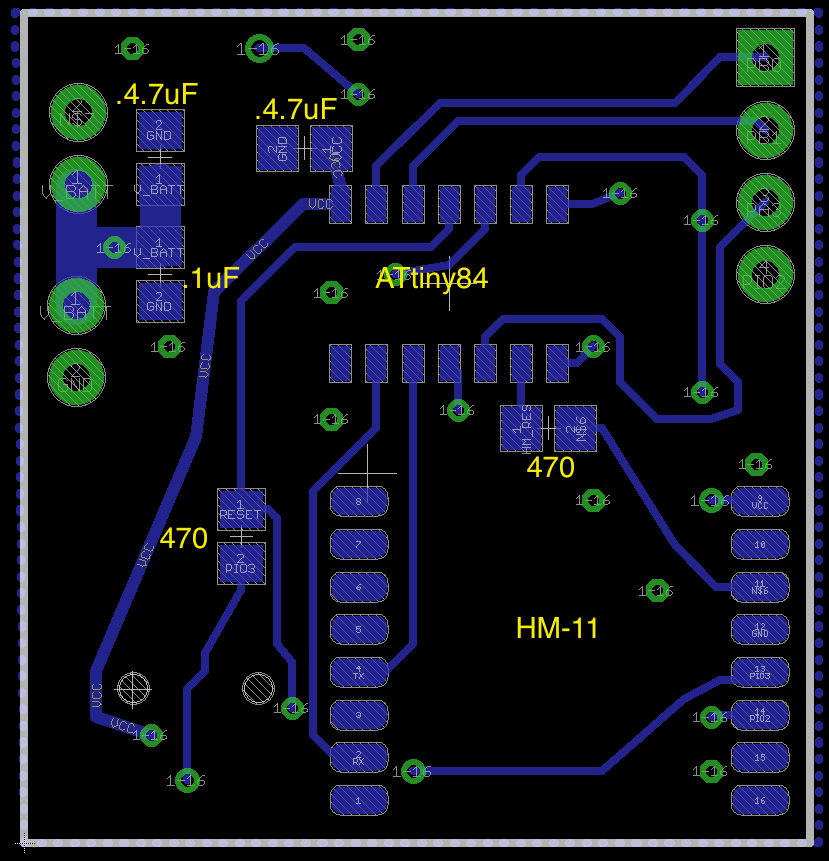

The Robber Board

This board originated with a request from an LPC who was practicing EMDR and wanted to upgrade his feedback machine. He had requested it be wireless and provide both haptic and visual feedback. The whole thing fell apart when he sent me an NDA which seemed typical of real professional level projects. However, it would have prohibited me from sharing anything I discovered, and given the amount I could have made from it, well, it just wasn’t worth it. I thought I’d finish the project and share with everyone, since sharing is the greatest form of payment.

Here are some of the features of the board:

- OTA Uploading to an ATtiny84

- An on-board charging circuit with load sharing capability (thanks Zak Kemble)

- Two RGB LEDs to provide visual feedback.

- One unidirectional motor driver meant to control a vibration motor.

- Three free pins

My purpose in completing the board is to continue to test my TinySafeBoot BLE uploader:

I’m also in the process of re-writing the uploader to be cross platform, targeting Android, iOS, and Windows 10. It’ll be a feat, but I’m thinking I’ll center the project around Dropbox. The consumer would:

- Compile an AVR binary using Arduino, Atmel Studio, or avr-gcc

- Save the binary in Dropbox

- Lumi3 would then connect to the target device. This could be done from Android, iOS, or Windows.

- Lumi3 would then pull the binary from Dropbox and upload it using the TinySafeBoot bootloader.

Not sure if I can pull it off; wish me luck. And feel free to follow the code base here:

Design Info

HM-11 Setup

A few commands which are required to make the OTA process work correctly:

- AT+AFTC3FF – This command sets all IO pins to go HIGH after connection. This isn’t strictly needed for OTA, but without it the AVR’s RESET would be pulled low as soon as the HM-11 connects, and any sketch you have running would immediately be shut down.

- AT+BEFC3FF – This is like the AFTC command, except it sets the IO pins HIGH as soon as the HM-11 is powered. For the Robber, if this is not set, the AVR will stay in reset until connected. Initially, I didn’t notice this and spent a lot of time trying to figure out why the AVR wouldn’t respond. Sigh.

- AT+MODE2 – This puts the HM-11 into “Remote” mode, which allows AT commands to be sent to the HM-11 through the BLE connection after it has been connected. This is what allows commands to be sent remotely to toggle the PIO connected to the AVR’s RESET.

A few commands which I think make the connection more reliable:

- AT+BAUD2 – This sets the communication rate between the HM-11 and AVR to 38400. After testing, this is about the highest speed the ATtiny can handle with the TSB auto-baud.

- AT+POWE3 – This raises the radio gain of the HM-11. Power conservation is not the friend of prototyping.

- AT+GAIN1 – I think this raises the gain on the HM-11’s RX? I’m not sure. The documentation is a little crappy.

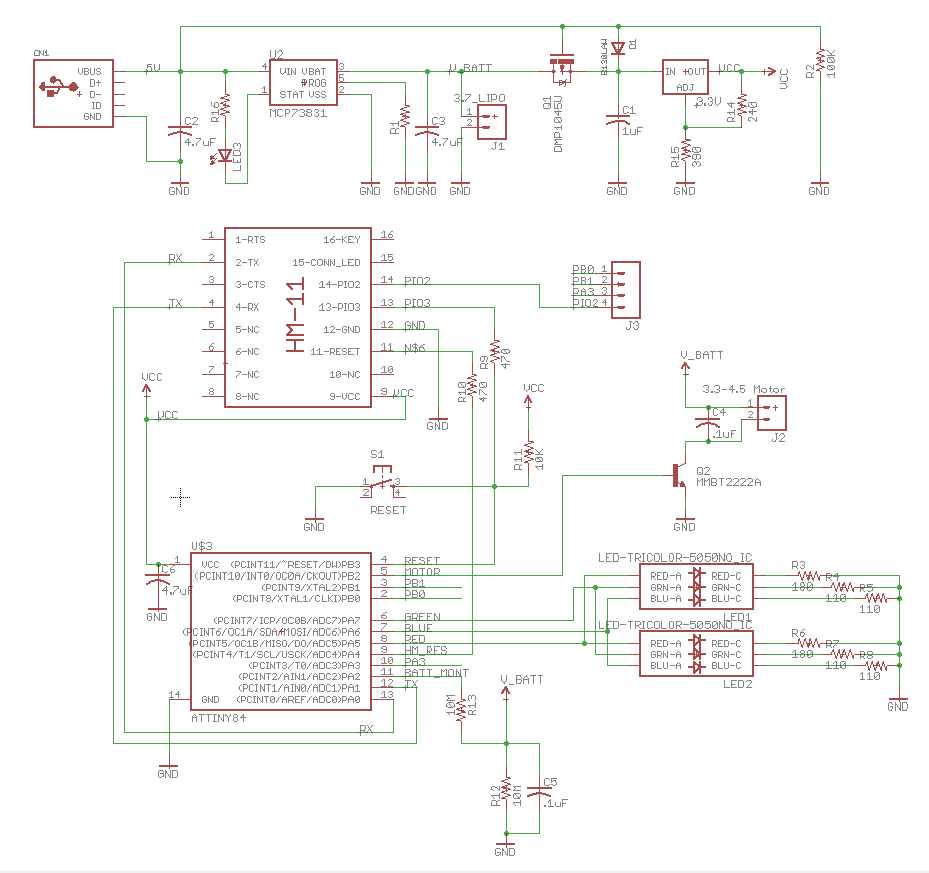

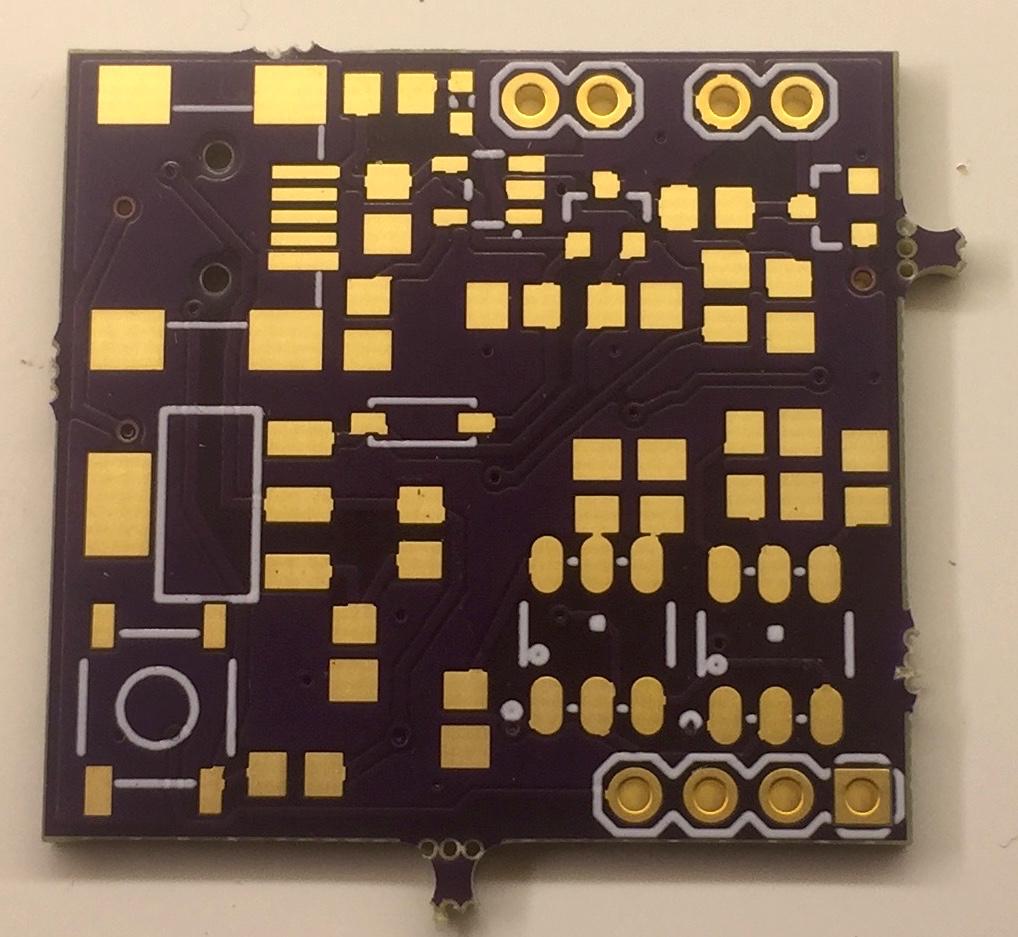

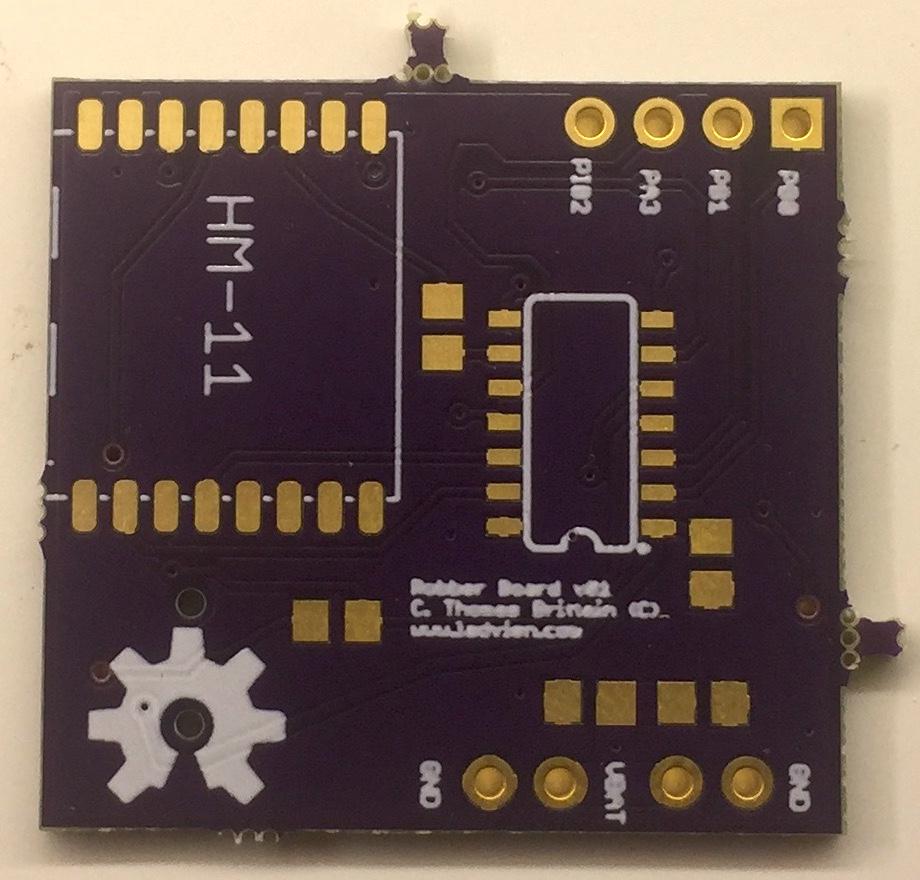

Debugging First Iteration

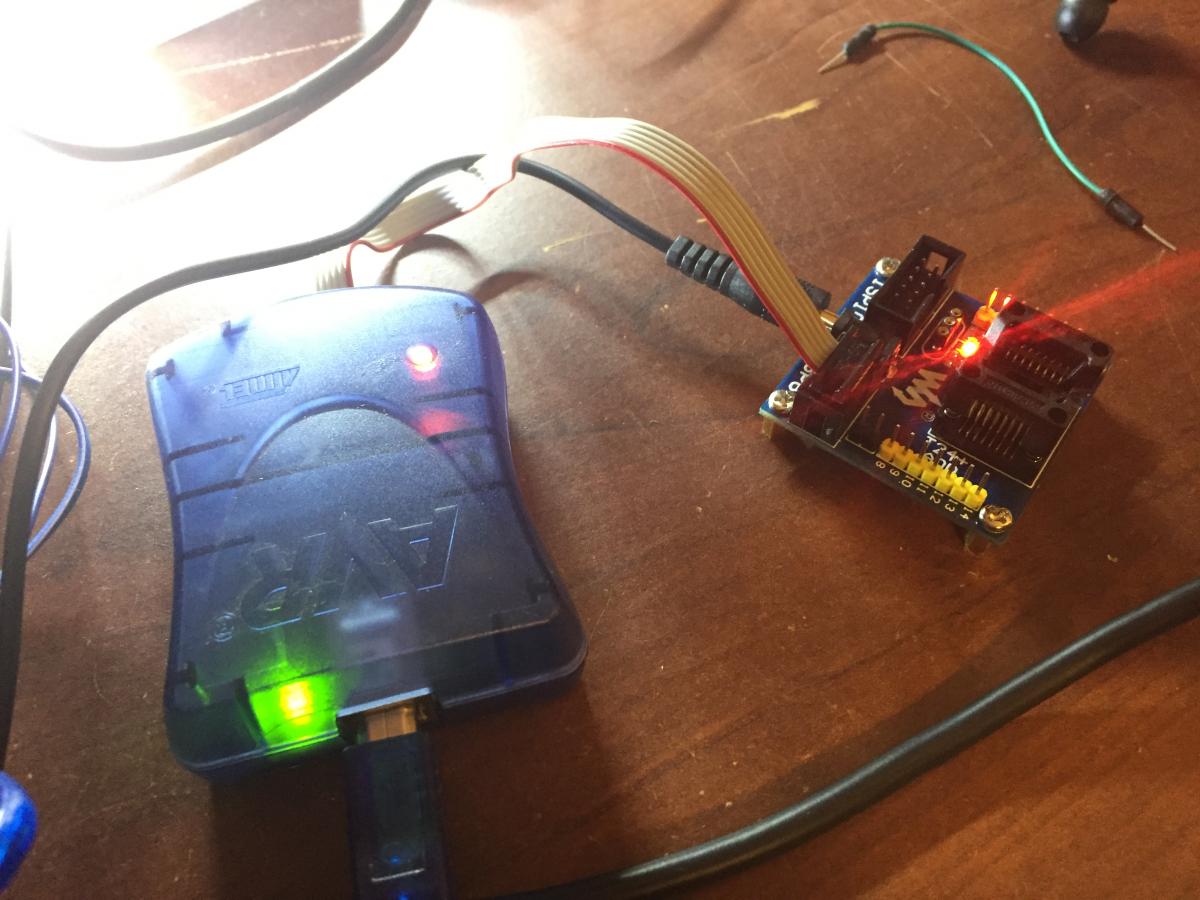

The boards came in from OSHPark. They look sharp, but it’s time to test the board and see what mistakes were made.

Circuits tested:

| Circuit Abstraction | Tested | Pass | Description of Issues |
| --- | --- | --- | --- |
| Mini USB | Yes | 100% | |
| MCP73831 | Yes | 100% | Battery charges. Used a 2k resistor for the charge rate. |
| MCP73831 - LED Charge Indicator | Yes | 100% | |
| Load Sharing | Yes | 100% | Sheesh, I love this circuit. Thanks Zak. |
| LM1117 – 3.3v | Yes | 50% | The OUTPUT voltage was calculated based upon a 5V INPUT. However, when the USB connection is removed the INPUT voltage is equal to the VBAT voltage. In short, the output is ~3.29v when connected to USB and 2.9v when running on battery. This will drop even more as the battery discharges. I’ve selected a fixed VREG to drop in later (NCP186-AMX330TAG). |
| Tactile Reset Switch | Yes | 75% | The reset line was being pulled HIGH with a 10k resistor. This might have been causing issues. It was removed for the time being and will be re-added if needed. |
| ATtiny84 – Wireless UART Connection | Yes | 85% | I cannot get the ATtiny84’s TSB bootloader to respond. I’ve tried both wireless TSB HELLO and jumpering directly to the UART. No response. I’m currently waiting on a SOIC-14 ATtiny AVR programmer to arrive to more easily troubleshoot the ATtiny84 chip. One thing I’d like to test is the BROWNOUT fuse setting. It seems like this fuse was set differently than usual. Also, testing the UART on the naked chip would be helpful. Update: Ok, not sure what happened to the chip, but apparently I killed it? Regardless, when a new one was programmed and swapped in using a heat gun, the new one worked like a charm. I’ve provided more details below. |
| Battery Voltage Monitor | No | | |
| RGB LEDs | No | | |
| HM-11 Reset of ATtiny84 | Yes | 0% | BAD IDEA! I’ve wasted a lot of time because the program I uploaded was sending the HM-11 RESET pin HIGH and LOW. This made it difficult to re-program, since the HM-11 would lose connection before the upload finished. Dear lord, what was I thinking? |
| Vibration Motor | Yes | 100% | It doesn’t work too well when powered by USB alone. Probably need to get a lower powered vibration motor. Also, I don’t like the wire connections. They get all tangled and pull off. It’d be better with a PCB mounted vibration motor. |
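
A quick note on the 2k charge resistor in the MCP73831 row. As far as I recall from the MCP73831 datasheet, the fast-charge current is set by the programming resistor, so the figure below is an estimate worth checking against the datasheet for your exact part:

$$ I_{REG} = \frac{1000\,\text{V}}{R_{PROG}} = \frac{1000\,\text{V}}{2\,\text{k}\Omega} = 500\,\text{mA} $$

If that holds, the battery needs to tolerate roughly a 500 mA charge rate; a larger resistor would lower it.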

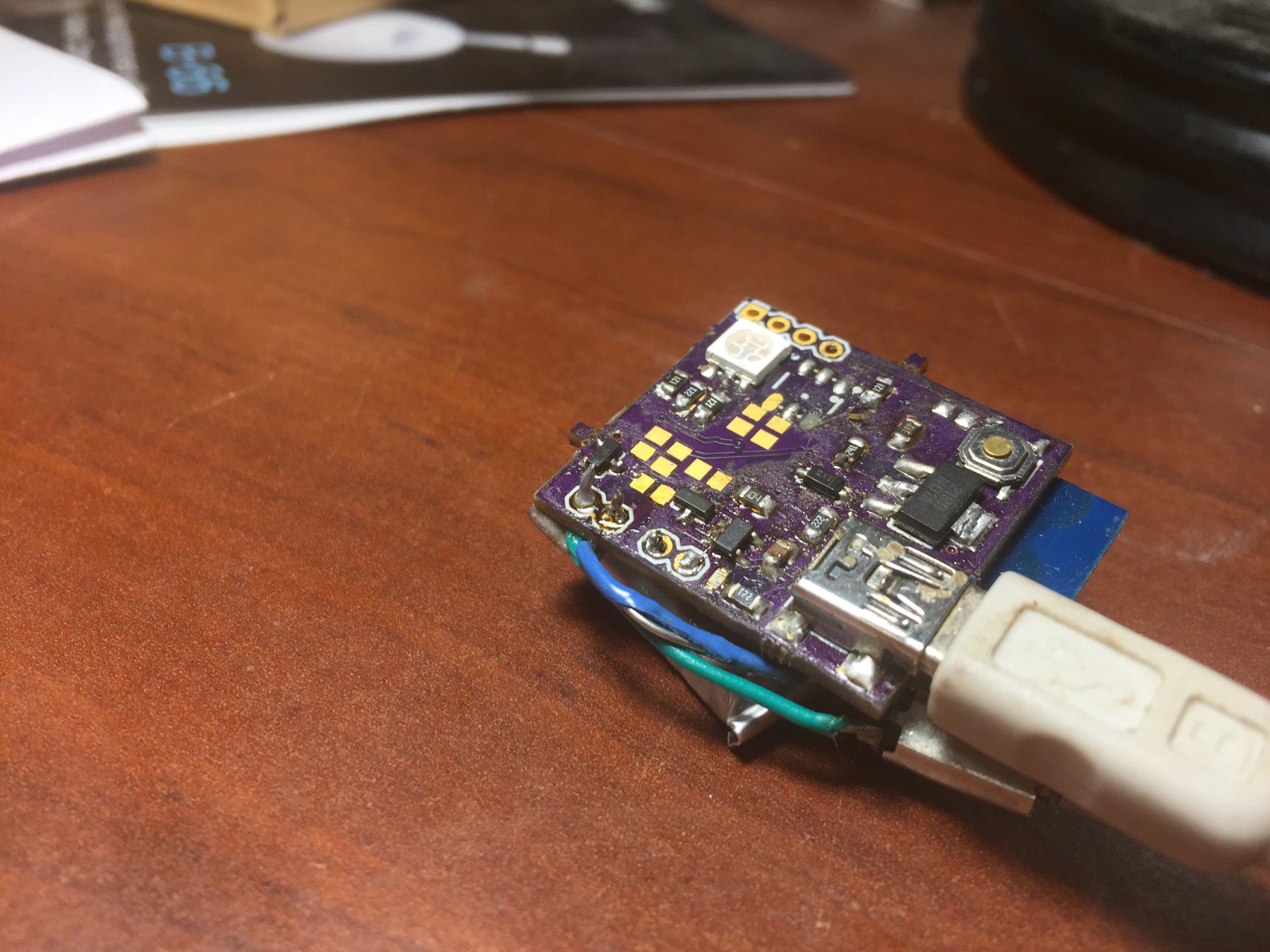

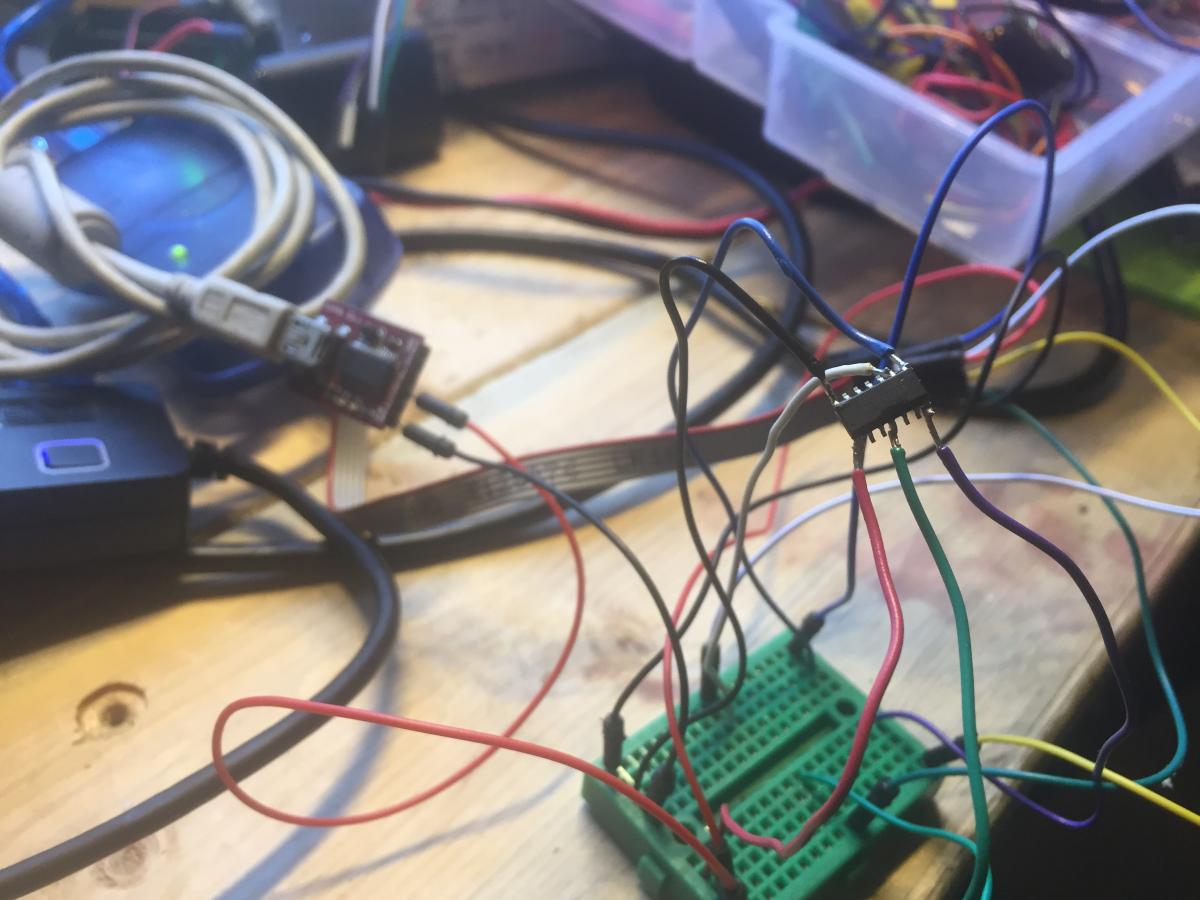

Debugging Wireless UART Connection

When the ATtiny84 and HM-11 combination was tested using Lumi, the Robber board failed to handshake. It was disheartening. The connections were double checked, routing was checked, jumper wires soldered on, ATtiny84 fuses checked, even the TSB build was checked. Nothing. Frankly, the reason it was such a problem to troubleshoot was lack of foresight in prototyping. Here are a couple of things which should be in every prototype:

- Jumpers. This is especially true if there is a UART connection. They just beg to be miswired. By providing solder-jumpers the UART connection can be tested one device at a time: the ATtiny84, then the HM-11.

- Test points. Gah, this is a no-brainer, but inevitably leads end up being soldered on at the most precarious places.

Back to the Robber board issue. I got wore out trying to do stuff like this:

The wires kept popping off, they’d need to be resoldered, then pop off again. Finally, I broke down and bought a SOIC-14 AVR Programmer. Dear lord, where has this thing been all of my life? Instead of taking two hours of setting up a chip, wiring leads, and programming it, I was done in about 10 minutes. If you like SOIC ATtiny85/84 chips, get one!

Here’s an action shot!

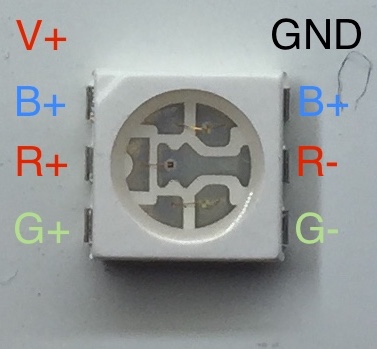

SMD RGB LED

There was trouble testing the SMD RGB LEDs. During the solder-desoldering process the voltage traces for the green LED came off the board. However, this shouldn’t affect the second LED–but for some reason the green channel on the other LED is the only one working properly. The red and blue do not seem to be lighting up.

I thought I’d take a moment and diagram the SMD RGBs I’m using, as I can’t ever seem to get directionality correct.

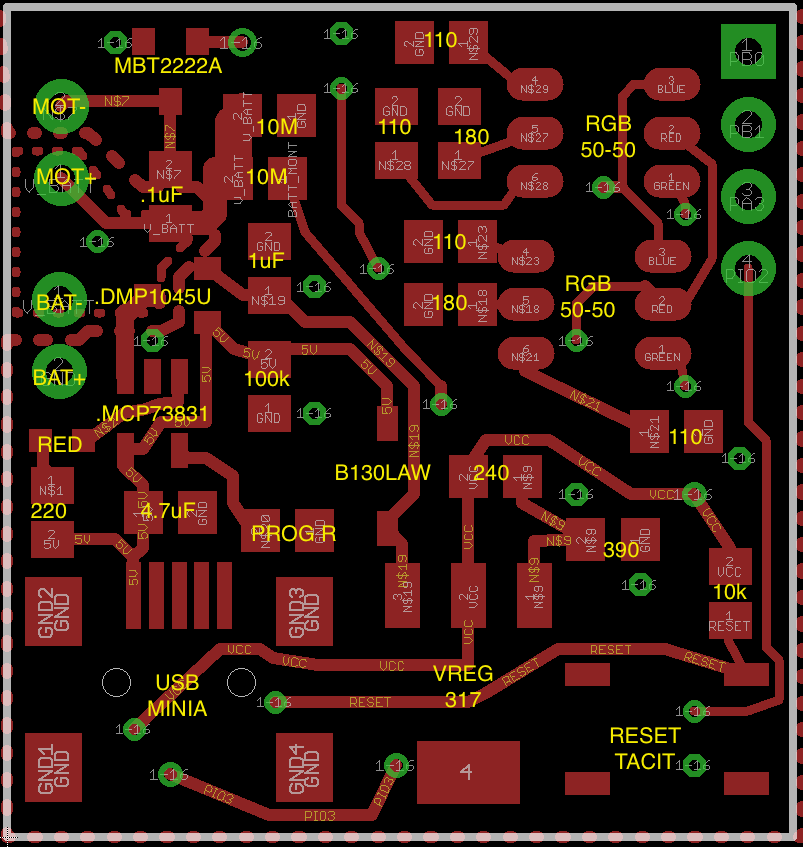

2017-12-24 – v3

I’ve almost finished testing the Robber board v3. A few changes:

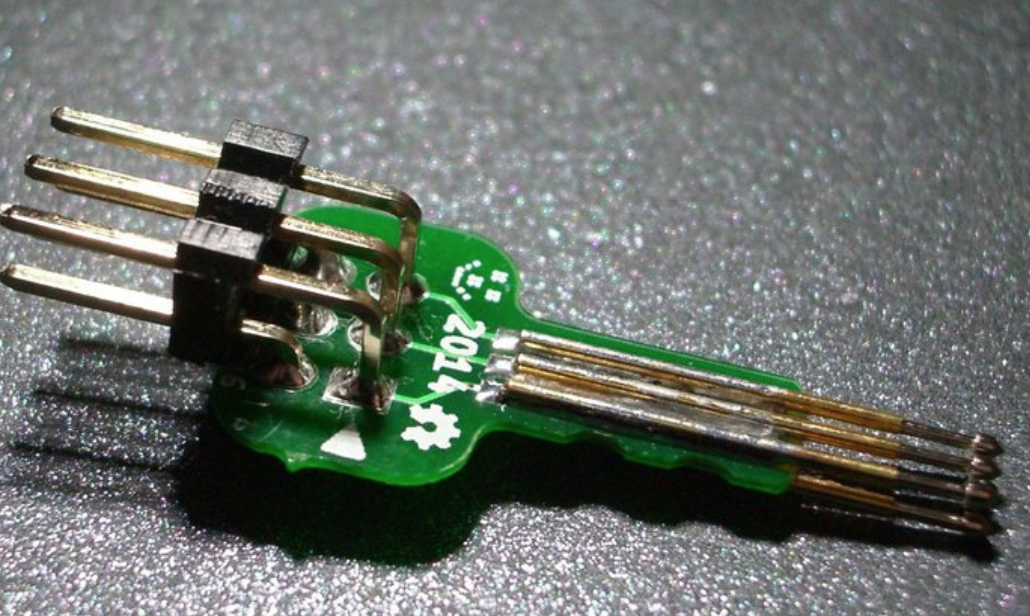

ISP Key

I’ve added a special ISP header to the board. It works with the Tiny AVR-ISP pogo-pin programming adapter.

It’s a bit of a pain to solder, but it’s pretty darn sweet once it’s in place. Of course, the header is backwards. More on that later.

Shaping and Combining HMIS Data from ETO

Continuing to explore R and SQL’s usefulness when it comes to HMIS data, I decided to start posting HMIS problems along with R and SQL solutions.

Problem: Our HMIS implementation has had three shelters entering data into one partition. This has been a lot like mixing three different colors of sand into one bucket–much easier to put in than to sort out. It is also a problem since HUD requires Emergency Solutions Grant (ESG) recipients to provide an annual standardized report, known as the CAPER, on data defined by the HMIS Data Dictionary. These data elements are referred to as Universal Data Elements. With this mixed bucket, responsibility for data errors becomes a big issue. The CAPER only allows up to 25% missing data, which makes data quality important. As for data repair, this should be completed by the agency which created the error. This makes communicating data issues imperative.

Unfortunately, when data from several agencies is mixed, creating an error report is problematic, at least for the HMIS software our continuum of care utilizes. The data quality reports our HMIS office produces lump all errors together. This leads to social loafing between the agencies and, in turn, few data repairs.

Solution: The solution seems to be to sort the data back out, re-assigning it to the respective agency’s data. This would allow data quality reports to assign responsibility for repair. Currently, our COC uses Social Solutions ETO software to manage our HMIS. The process of moving the data consists of the following steps:

- Determine all data which needs to be migrated. For us, this is Demographic, HUD Assessment, and Bed Stay data.

- Export these data sets.

- Sort the data sets to respective agencies.

- Import the data using a predefined template.

This article focuses on the third step. The data has been exported, but how to sort it?
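
In its simplest form, sorting comes down to keeping only the rows whose key appears in one agency's list of participants. Here is a minimal base R sketch of that idea. The data frames, column names, and values are made up for illustration and are not from the real export.

```r
# Hypothetical export: one row per assessment, keyed by case number.
assessments <- data.frame(
  caseNumber = c(101, 102, 103, 104),
  cocCode    = c("tx601", "TX-601", "tx601", "TX-601"),
  stringsAsFactors = FALSE
)

# Hypothetical list of case numbers belonging to one agency.
agencyCaseNumbers <- data.frame(caseNumber = c(102, 104))

# merge() with its default all = FALSE keeps only matching keys,
# which behaves like an INNER JOIN on the shared column.
agencyAssessments <- merge(assessments, agencyCaseNumbers, by = "caseNumber")

print(agencyAssessments)
```

The same filter can be written as an INNER JOIN in sqldf; merge() is used here only so the sketch runs without extra packages.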

Below is a script written to take a flat file of HUD Assessments and

- Filter to the respective program

- Filter HUD Assessments to just Project Entry

- Repair the COC code (e.g., “tx601” -> “TX-601”)

- Re-assign the data to the proper Site (agency’s data) and Program.

- Chop data into sets of no more than 500 rows, making the import process easier

- Write the data out to files.

It’s pretty hackish, but it worked.
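
As an aside on the COC code repair step in the list above: the repair could also be done with a pattern rather than a fixed value. This is a minimal sketch, assuming every code is two letters followed by three digits with an optional hyphen. The function name `repairCocCode` is made up for illustration and is not part of the script that follows.

```r
# Normalize CoC codes such as "tx601" to the "TX-601" form.
# Assumption: codes are two letters plus three digits, hyphen optional.
repairCocCode <- function(codes) {
  # Strip stray whitespace and force upper case before matching.
  cleaned <- toupper(trimws(codes))
  # Re-insert the hyphen between the letter and digit groups.
  # Values that do not fit the pattern are returned unchanged.
  sub("^([A-Z]{2})-?([0-9]{3})$", "\\1-\\2", cleaned)
}

repaired <- repairCocCode(c("tx601", "TX-601", " tx-601 "))
print(repaired)
```

Codes that don't match the assumed shape pass through untouched, which makes them easy to spot afterwards.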

library("sqldf")

library("readxl")

# Script Settings --------------------------------------------------------

# Name of output file, not including CSV extension.

outputFileName <- paste("HUD Assessments to Upload - v01 -")

# Paths to input files.

inputFileNameforHUDAssessments <- paste("TCES HUD Assesment Batch Report of Active Participants on 12-31-2016 - v09.xlsx")

inputFileNameforKeysToMatch <- paste("PEIDs of TCES Active Participants.xlsx")

# Names of target Site and Program

siteName <- "The Salvation Army Mabee Center"

programName <- "TSA Emergency Shelter Check In"

# ----------------------------------------

# Function to split files.

splitDataAndWriteFiles <- function(df, chunkSize, filename) {

  # Number of files needed to hold every row.

  chunkCount <- ceiling(nrow(df) / chunkSize)

  for (count in seq_len(chunkCount) - 1) {

    # First and last row of this chunk; the last chunk may be shorter.

    firstRow <- (count * chunkSize) + 1

    lastRow <- min((count + 1) * chunkSize, nrow(df))

    print(paste(firstRow, lastRow))

    chunk <- df[firstRow:lastRow, , drop = FALSE]

    # Use the filename argument; paste0() avoids spaces in the file name.

    fileName <- paste0(filename, "_", count, ".csv")

    # Write out this chunk of the Active HUD Assessments.

    write.csv(chunk, file = fileName, na = "", row.names = FALSE)

  }

  return(chunkCount > 0)

}

# Load all HUD Data

hudAssRaw <- read_excel(inputFileNameforHUDAssessments, na = "")

hudAssRaw <- subset(hudAssRaw, slect = -NA)

# Re-title columns for easier handling.

colnames(hudAssRaw)[1] <- "peid"

colnames(hudAssRaw)[11] <- "Relation_to_HH"

colnames(hudAssRaw)[12] <- "COC_Code"

# Replaces COC code for heads of household

hudAssRaw$COC_Code[with(hudAssRaw, Relation_to_HH == "Self (head of household)")] <- "TX-601"

hudAssRaw <- subset(hudAssRaw, slect = -NA)

# Subset Project Entry data.

hudAssRaw <- sqldf("SELECT * FROM hudAssRaw WHERE hudAssRaw.'At what point is this data being collected?_2270' = 'Project Entry'")

# Re-assign the data to the target Program and Site

hudAssRaw$'Program Name' <- programName

hudAssRaw$'Site Name' <- siteName

colnames(hudAssRaw)[13] <- "A-3 What is the client's relationship t_2272"

colnames(hudAssRaw)[14] <- "A-5 HUD-assigned CoC code for the clien_2273"

# Set the dates back to YYYY-MM-DD

#hudAssRaw$`Response Date` <- as.Date(hudAssRaw$`Response Date`, "%Y%m%d")

#hudAssRaw$`DOB` <- as.Date(hudAssRaw$`DOB`, "%Y%m%d")

#hudAssRaw$`A-57 Approximate date homelessness star_6115` <- as.Date(hudAssRaw$`A-57 Approximate date homelessness star_6115`, "%Y%m%d")

#hudAssRaw$`A-58 Approximate date homelessness star_6116` <- as.Date(hudAssRaw$`A-58 Approximate date homelessness star_6116`, "%Y%m%d")

hudAssRaw <- subset(hudAssRaw, slect = -NA)

# Get target site Participant IDs

targetSiteParticipantIDs <- read_excel("TSA ESCI Target PSID.xlsx")

assessmentsWithTargetPID <- sqldf("SELECT * FROM targetSiteParticipantIDs

INNER JOIN hudAssRaw

ON hudAssRaw.'Case Number'=targetSiteParticipantIDs.'Case Number'")

# Free up space.

rm(hudAssRaw)

rm(targetSiteParticipantIDs)

assessmentsWithTargetPID <- subset(assessmentsWithTargetPID, slect = -NA)

colnames(assessmentsWithTargetPID)[1] <- "pid"

colnames(assessmentsWithTargetPID)[12] <- "rid"

# INNER JOIN on self to get -only- the first HUD Assessment

# Thanks SO! http://stackoverflow.com/questions/7745609/sql-select-only-rows-with-max-value-on-a-column

assessmentsWithTargetPID <- sqldf("SELECT *

FROM assessmentsWithTargetPID a

INNER JOIN (

SELECT pid, MIN(rid) rid

FROM assessmentsWithTargetPID

GROUP BY pid

) b ON a.pid = b.pid AND a.rid = b.rid

")

# Remove PEID

assessmentsWithTargetPID <- subset(assessmentsWithTargetPID, select = -c(peid,peid.1))

write.csv(assessmentsWithTargetPID, file = "First HUD Entry Assessments for ESCI.csv", na = "", row.names = FALSE)

# Split the data into chunks and write to files.

splitDataAndWriteFiles(assessmentsWithTargetPID, 500, outputFileName)

R Function to Split CSVs

This is an R function written to split a dataset into sets of a particular size, then write each one out as a CSV. Often, our office needs a quick way to split files for uploading purposes, since our HMIS software doesn’t handle large uploads well.

For example:

splitDataAndWriteFiles(df, 500, "My_Data")

will produce a series of files named “My_Data_X.csv”, where X is the chunk number.

options(java.parameters = "-Xmx14336m") ## memory set to 14 GB

library("XLConnect")

# Function to split files.

splitDataAndWriteFiles <- function(df, chunkSize, nameOfFiles) {

  # Number of files needed to hold every row.

  chunkCount <- ceiling(nrow(df) / chunkSize)

  for (count in seq_len(chunkCount) - 1) {

    # First and last row of this chunk; the last chunk may be shorter.

    firstRow <- (count * chunkSize) + 1

    lastRow <- min((count + 1) * chunkSize, nrow(df))

    print(paste(firstRow, lastRow, sep = "_"))

    chunk <- df[firstRow:lastRow, , drop = FALSE]

    # paste0() avoids the spaces paste() would put in the file name.

    fileName <- paste0(nameOfFiles, "_", count, ".csv")

    # Write out this chunk.

    write.csv(chunk, file = fileName, na = "", row.names = FALSE, fileEncoding = "UTF-8")

  }

  return(chunkCount > 0)

}

fileToSplit <- read.csv("UPLOAD -- Sal Men-- TCES Move -- TSA Bed Data Template.csv")

splitDataAndWriteFiles(fileToSplit, 5000, "Sal_Men_NBN")
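
For comparison, base R's split() can do the chunking without a hand-rolled loop counter. This is only a sketch of an alternative, not what our office runs. The helper name `splitIntoChunks` and the example data are made up, and the files go to a temporary directory so nothing lands in the working directory.

```r
# Split a data frame into consecutive chunks of at most chunkSize rows.
# splitIntoChunks is a hypothetical helper, not part of the script above.
splitIntoChunks <- function(df, chunkSize) {
  if (nrow(df) == 0) return(list())
  # Rows 1..chunkSize get group 1, the next chunkSize rows get group 2, etc.
  groupIndex <- ceiling(seq_len(nrow(df)) / chunkSize)
  unname(split(df, groupIndex))
}

# Made-up data: 12 rows split into chunks of 5.
exampleData <- data.frame(id = 1:12, value = letters[1:12])
chunks <- splitIntoChunks(exampleData, 5)

# Write each chunk to a temporary directory, numbering files from 0.
outputDir <- tempdir()
for (i in seq_along(chunks)) {
  fileName <- file.path(outputDir, paste0("My_Data_", i - 1, ".csv"))
  write.csv(chunks[[i]], file = fileName, na = "", row.names = FALSE)
}

# Row count of each chunk; the last one holds whatever is left over.
chunkSizes <- sapply(chunks, nrow)
print(chunkSizes)
```

The last chunk simply comes out shorter, so no empty rows are padded onto the final file.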