This article is part of a series.

View all 6 parts

- Part 1 – Setting up a Raspberry Pi NAS

- Part 2 – This Article

- Part 3 – Securely Expose Your Raspberry Pi 5 with Cloudflare Tunnel

- Part 4 – Nginx Reverse Proxy on Raspberry Pi (Ubuntu Server)

- Part 5 – Add HTTPS to Your Raspberry Pi with Nginx and Cloudflare

- Part 6 – Switch from Cloudflare Origin Certificates to Let’s Encrypt on Raspberry Pi with Cloudflare Tunnel

This guide walks through configuring a production-ready PostgreSQL server on a Raspberry Pi 5 using a Linux-distro-agnostic approach — suitable for Arch, Fedora, Ubuntu Server, and others.

1. Prerequisites

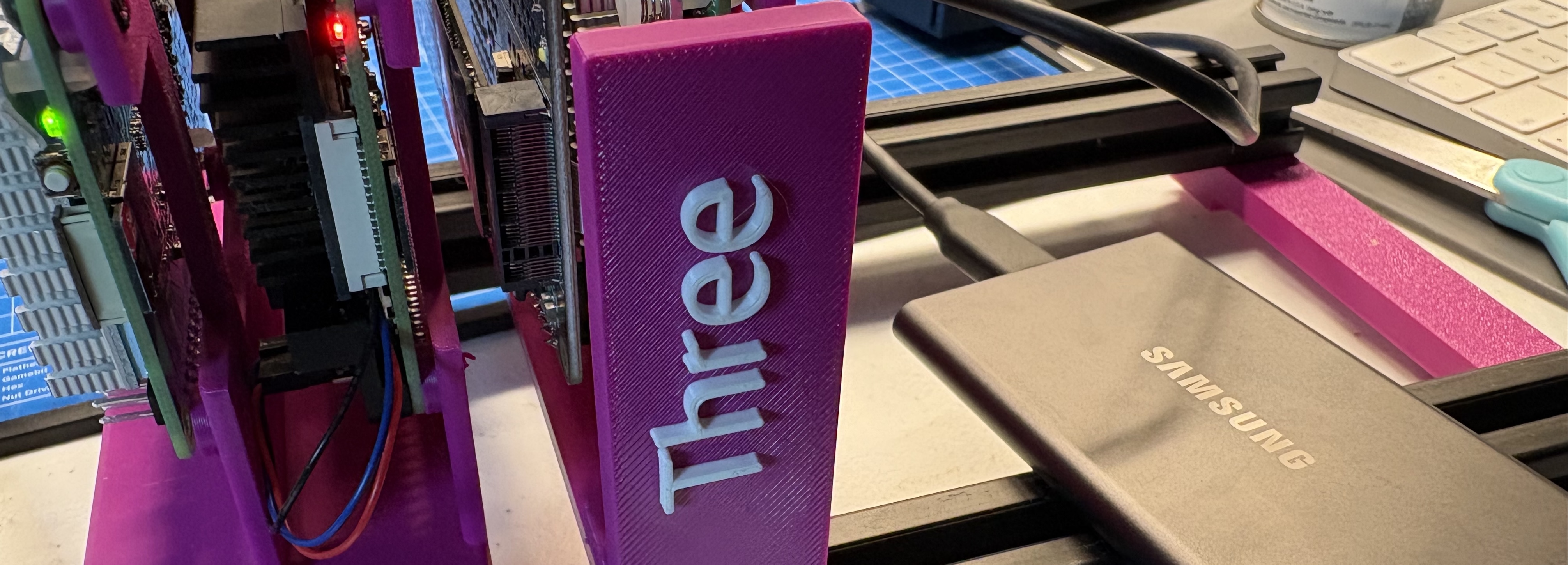

- Raspberry Pi 5 (4/8/16 GB RAM)

- 64-bit Linux (Ubuntu, Arch, Fedora, etc.)

- External SSD (recommended)

- Static IP address

- SSH access

2. System Preparation

Format and Mount External SSD

Identify the drive:

lsblk

(Optional) Partition and format the drive:

sudo fdisk /dev/nvme0n1 # Create partition (e.g., /dev/nvme0n1p1)

sudo mkfs.ext4 /dev/nvme0n1p1

Mount with

fstab

:

sudo blkid

Example output:

/dev/nvme0n1p1: UUID="abcd-1234" TYPE="ext4"

Then edit

/etc/fstab

:

UUID=abcd-1234 /mnt/datadrive ext4 defaults,nofail 0 2

Mount:

sudo mkdir -p /mnt/datadrive

sudo mount -a

3. Update System

sudo <package-manager> update && sudo <package-manager> upgrade -y

Replace

<package-manager>

with

apt

,

dnf

,

pacman

, etc.

4. Install PostgreSQL

Use your distro's package manager:

-

Debian/Ubuntu

:

bash sudo apt install postgresql -y -

Fedora/RHEL

:

bash sudo dnf install postgresql-server -y -

Arch

:

bash sudo pacman -S postgresql

5. Initialize and Configure PostgreSQL with a Custom Service

Stop and disable the default PostgreSQL cluster:

sudo systemctl stop postgresql@17-main

sudo systemctl disable postgresql@17-main

Initialize the database in a custom location:

Find your initdb path:

find /usr -name initdb 2>/dev/null

Then run:

sudo -u postgres /usr/lib/postgresql/17/bin/initdb -D /mnt/datadrive/postgresql/17/main

sudo chown -R postgres:postgres /mnt/datadrive/postgresql

Create a custom systemd service file:

sudo nano /etc/systemd/system/postgresql-custom.service

Paste the following:

[Unit]

Description=Custom PostgreSQL Service

After=network.target

[Service]

Type=forking

User=postgres

Environment=PGDATA=/mnt/datadrive/postgresql/17/main

ExecStart=/usr/lib/postgresql/17/bin/pg_ctl start -D /mnt/datadrive/postgresql/17/main -l /mnt/datadrive/postgresql/17/main/logfile

ExecStop=/usr/lib/postgresql/17/bin/pg_ctl stop -D /mnt/datadrive/postgresql/17/main

ExecReload=/usr/lib/postgresql/17/bin/pg_ctl reload -D /mnt/datadrive/postgresql/17/main

Restart=on-failure

[Install]

WantedBy=multi-user.target

Then enable and start:

sudo systemctl daemon-reload

sudo systemctl enable postgresql-custom

sudo systemctl start postgresql-custom

Verify it's working:

sudo -u postgres psql -c "SHOW data_directory;"

6. Create Databases and Users

Create your application users and databases first:

sudo -u postgres psql

Inside the shell:

-- Create users with secure passwords

CREATE USER lightdash WITH PASSWORD 'your_secure_lightdash_password';

CREATE USER self_sensored WITH PASSWORD 'your_secure_self_sensored_password';

-- Create databases owned by respective users

CREATE DATABASE lightdash OWNER lightdash;

CREATE DATABASE self_sensored OWNER self_sensored;

-- Grant necessary privileges

GRANT ALL PRIVILEGES ON DATABASE lightdash TO lightdash;

GRANT ALL PRIVILEGES ON DATABASE self_sensored TO self_sensored;

-- Optional: Create additional users for other applications

CREATE USER app_user WITH PASSWORD 'StrongSecurePass123';

CREATE DATABASE production_db OWNER app_user;

GRANT ALL PRIVILEGES ON DATABASE production_db TO app_user;

-- For FastAPI applications:

CREATE USER fastapi_user WITH PASSWORD 'your_fastapi_password';

CREATE DATABASE fastapi_db OWNER fastapi_user;

REVOKE ALL ON DATABASE fastapi_db FROM PUBLIC;

GRANT CONNECT ON DATABASE fastapi_db TO fastapi_user;

\c fastapi_db

GRANT USAGE, CREATE ON SCHEMA public TO fastapi_user;

ALTER DEFAULT PRIVILEGES IN SCHEMA public GRANT SELECT, INSERT, UPDATE, DELETE ON TABLES TO fastapi_user;

-- Exit psql

\q

Connection string examples:

postgresql+asyncpg://lightdash:your_secure_lightdash_password@<host>:5432/lightdash

postgresql+asyncpg://fastapi_user:your_fastapi_password@<host>:5432/fastapi_db

7. Tune for Performance

Edit:

sudo nano /mnt/datadrive/postgresql/17/main/postgresql.conf

Set performance parameters appropriate for Pi 5:

listen_addresses = '*'

# Memory settings (adjust based on your Pi 5 model)

# For 4GB Pi 5:

shared_buffers = 512MB

effective_cache_size = 3GB

# For 8GB Pi 5:

# shared_buffers = 1GB

# effective_cache_size = 6GB

# For 16GB Pi 5:

# shared_buffers = 2GB

# effective_cache_size = 12GB

# Other performance settings

work_mem = 8MB

maintenance_work_mem = 256MB

wal_level = replica

max_wal_size = 1GB

# Logging (optional but recommended)

log_connections = on

log_disconnections = on

log_line_prefix = '%t [%p]: [%l-1] user=%u,db=%d '

log_min_duration_statement = 1000

Restart to apply changes:

sudo systemctl restart postgresql-custom

8. Enable Remote Access

Find your pg_hba.conf file:

sudo -u postgres psql -c "SHOW hba_file;"

With a custom data directory, it will be:

sudo nano /mnt/datadrive/postgresql/17/main/pg_hba.conf

Replace the contents with this secure configuration:

I want my databases accessible anywhere on my local network, so I use a CIDR block for my home network. Adjust the IP range as needed.

# TYPE DATABASE USER ADDRESS METHOD

# Database administrative login by Unix domain socket

local all postgres peer

# Per user and database, accessible on local network

host lightdash lightdash 192.168.1.0/24 scram-sha-256

host self_sensored self_sensored 192.168.1.0/24 scram-sha-256

host production_db app_user 192.168.1.0/24 scram-sha-256

host fastapi_db fastapi_user 192.168.1.0/24 scram-sha-256

# Local TCP connections (for apps on the Pi itself)

host lightdash lightdash 127.0.0.1/32 scram-sha-256

host self_sensored self_sensored 127.0.0.1/32 scram-sha-256

host production_db app_user 127.0.0.1/32 scram-sha-256

host fastapi_db fastapi_user 127.0.0.1/32 scram-sha-256

# IPv6 local connections

host lightdash lightdash ::1/128 scram-sha-256

host self_sensored self_sensored ::1/128 scram-sha-256

# Deny all other connections

local all all reject

host all all 0.0.0.0/0 reject

host all all ::/0 reject

Restart PostgreSQL:

sudo systemctl restart postgresql-custom

Test connectivity:

# Test local connection

sudo -u postgres psql -c "SELECT version();"

# Test remote connection (from another machine on your network)

psql -h 192.168.1.X -U lightdash -d lightdash

9. Password Management and Cleanup

Secure the postgres superuser account:

sudo -u postgres psql

-- Set a strong password for the postgres superuser

ALTER USER postgres WITH PASSWORD 'NewSecurePostgresPass!';

-- List all users to verify

\du

-- Remove any unnecessary default users (if they exist)

-- DROP ROLE IF EXISTS old_user;

\q

10. Secure Your Server

Configure firewall to allow only local network access:

sudo ufw allow ssh

sudo ufw allow from 192.168.1.0/24 to any port 5432

sudo ufw enable

Or use

firewalld

or

iptables

depending on your distro:

# For firewalld (Fedora/RHEL)

sudo firewall-cmd --permanent --add-service=ssh

sudo firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='192.168.1.0/24' port protocol='tcp' port='5432' accept"

sudo firewall-cmd --reload

11. Setup Automatic Backups

Create a robust backup system with automatic rotation:

Create backup directory:

sudo mkdir -p /mnt/datadrive/db_backups

sudo chown postgres:postgres /mnt/datadrive/db_backups

Create the backup script:

sudo nano /usr/local/bin/pg_backup.sh

Add this content:

#!/bin/bash

# Configuration

BACKUP_DIR="/mnt/datadrive/db_backups"

DATE=$(date +%Y-%m-%d_%H-%M-%S)

RETENTION_DAYS=30

# Change to a safe working directory

cd /tmp

# Create backup directory if it doesn't exist

if ! mkdir -p "$BACKUP_DIR" 2>/dev/null; then

echo "$(date): ERROR - Cannot create backup directory $BACKUP_DIR" >&2

exit 1

fi

# Verify we can write to the backup directory

if ! touch "$BACKUP_DIR/test_write" 2>/dev/null; then

echo "$(date): ERROR - Cannot write to backup directory $BACKUP_DIR" >&2

exit 1

fi

rm -f "$BACKUP_DIR/test_write"

# Function to backup a single database

backup_database() {

local db_name=$1

local backup_file="$BACKUP_DIR/${db_name}_${DATE}.sql"

echo "$(date): Starting backup of $db_name"

# Check if database exists

if ! psql -lqt | cut -d \| -f 1 | grep -qw "$db_name"; then

echo "$(date): WARNING - Database $db_name does not exist, skipping"

return 0

fi

if pg_dump --clean --no-owner --no-privileges "$db_name" > "$backup_file" 2>/dev/null; then

# Compress the backup

if gzip "$backup_file"; then

echo "$(date): Successfully backed up $db_name to ${backup_file}.gz"

else

echo "$(date): ERROR - Failed to compress backup for $db_name" >&2

return 1

fi

else

echo "$(date): ERROR - Failed to backup $db_name" >&2

return 1

fi

}

# Backup specific databases

backup_database "lightdash"

backup_database "self_sensored"

backup_database "production_db"

backup_database "fastapi_db"

# Optional: Backup all databases

# pg_dumpall --clean > "$BACKUP_DIR/all_databases_${DATE}.sql"

# gzip "$BACKUP_DIR/all_databases_${DATE}.sql"

# Clean up old backups (older than RETENTION_DAYS)

if [ -d "$BACKUP_DIR" ]; then

find "$BACKUP_DIR" -name "*.sql.gz" -type f -mtime +$RETENTION_DAYS -delete 2>/dev/null

echo "$(date): Cleaned up backups older than $RETENTION_DAYS days"

fi

echo "$(date): Backup process completed"

Make the script executable:

sudo chmod +x /usr/local/bin/pg_backup.sh

Test the backup script:

sudo -u postgres /usr/local/bin/pg_backup.sh

Verify backups were created:

ls -la /mnt/datadrive/db_backups/

Schedule automatic backups:

Edit the postgres user's crontab:

sudo -u postgres crontab -e

Add this line for daily backups at 2 AM:

0 2 * * * /usr/local/bin/pg_backup.sh >> /var/log/pg_backup.log 2>&1

Create log rotation for backup logs:

sudo nano /etc/logrotate.d/pg_backup

Add:

/var/log/pg_backup.log {

weekly

rotate 12

compress

delaycompress

missingok

notifempty

create 644 postgres postgres

}

Test backup restoration:

A lot of engineers would consider this an optional step, however, I strongly recommend testing your backup restoration process to ensure everything works as expected. Nothing like counting on a backup system that does not work. 😬

Create some test data first:

# Add sample data to test the backup process

sudo -u postgres psql lightdash -c "

CREATE TABLE IF NOT EXISTS test_backup (

id SERIAL PRIMARY KEY,

name VARCHAR(50),

created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP

);

INSERT INTO test_backup (name) VALUES ('Backup Test');

"

Run a fresh backup:

sudo -u postgres /usr/local/bin/pg_backup.sh

Test restoration:

# Create test database

sudo -u postgres createdb test_restore

# Restore from backup (use the most recent backup)

sudo -u postgres bash -c "gunzip -c /mnt/datadrive/db_backups/lightdash_$(date +%Y-%m-%d)*.sql.gz | psql test_restore"

# Verify restoration worked

sudo -u postgres psql test_restore -c "\dt"

sudo -u postgres psql test_restore -c "SELECT * FROM test_backup;"

# Clean up test database

sudo -u postgres dropdb test_restore

12. Logging & Monitoring

Monitor your PostgreSQL server health:

View current connections:

sudo -u postgres psql -c "SELECT * FROM pg_stat_activity WHERE state = 'active';"

Check database sizes:

sudo -u postgres psql -c "

SELECT

datname as database,

pg_size_pretty(pg_database_size(datname)) as size

FROM pg_database

WHERE datname NOT IN ('template0', 'template1', 'postgres')

ORDER BY pg_database_size(datname) DESC;

"

Monitor backup health:

# Check recent backup logs

tail -f /var/log/pg_backup.log

# Verify recent backups exist

ls -lah /mnt/datadrive/db_backups/ | head -10

Enable detailed logging (optional):

Add to

/mnt/datadrive/postgresql/17/main/postgresql.conf

:

# Additional logging options

log_statement = 'mod' # Log all modifications

log_checkpoints = on

log_lock_waits = on

log_temp_files = 0

13. Restore from Backup

To restore from a backup:

# For a specific database

sudo -u postgres bash -c "gunzip -c /mnt/datadrive/db_backups/lightdash_2025-06-07.sql.gz | psql lightdash"

# For all databases (if you created a full backup)

sudo -u postgres bash -c "gunzip -c /mnt/datadrive/db_backups/all_databases_2025-06-07.sql.gz | psql"

This setup is distro-agnostic, scriptable, and portable across bare metal or container deployments. Ideal for home labs and production-ready apps alike.

Additional Production Considerations

For even more robust production deployments, consider:

- Point-in-time recovery with WAL archiving

- Replication to a secondary Pi for high availability

- Connection pooling with PgBouncer

- Monitoring with Prometheus and Grafana

- SSL/TLS encryption for remote connections

- Regular security updates and vulnerability scanning